Using an Environment

Initializing

If the gym-anm environment you would like to use has already been registered in Gym's registry (see the Gym documentation), you can initialize it with gym.make('gym_anm:<ENV_ID>'), where <ENV_ID> is the ID of the environment. For example:

import gym

env = gym.make('gym_anm:ANM6Easy-v0')

Note: all environments provided as part of the gym-anm package are automatically registered.

Alternatively, the environment can be initialized directly from its class:

from gym_anm.envs import ANM6Easy

env = ANM6Easy()

Agent-environment interactions

Built on top of Gym, gym-anm provides two core functions: reset() and step(a).

reset() can be used to reset the environment and collect the first observation of the trajectory:

obs = env.reset()

After the agent has selected an action a to apply to the environment, step(a) can be used to do so:

obs, r, done, info = env.step(a)

where:

- obs is the vector of observations \(o_{t+1}\),
- r is the reward \(r_t\),
- done is a boolean value set to True if \(s_{t+1}\) is a terminal state,
- info gathers information about the transition (it is seldom used in gym-anm).
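The four return values can be seen at work in a short interaction loop. The sketch below is an illustration only, not part of gym-anm: `StubEnv` is a hypothetical stand-in that mimics just the reset() and step(a) signatures described above, so the snippet runs without gym-anm installed. The reward it returns is arbitrary. With the real package, the environment would instead come from gym.make('gym_anm:ANM6Easy-v0').

```python
import random


class StubEnv:
    """Hypothetical stand-in exposing the reset()/step(a) interface described above."""

    def __init__(self, horizon=5):
        self.horizon = horizon  # steps before the stub reports a terminal state
        self.t = 0

    def reset(self):
        self.t = 0
        return [0.0]  # first observation of the trajectory

    def step(self, a):
        self.t += 1
        obs = [float(self.t)]          # plays the role of o_{t+1}
        r = -abs(a)                    # plays the role of r_t (arbitrary in this stub)
        done = self.t >= self.horizon  # True once the next state is terminal
        info = {}                      # extra information about the transition
        return obs, r, done, info


def run_episode(env, policy, max_steps=100):
    """Roll out one episode and return (total reward, number of steps taken)."""
    obs = env.reset()
    total, steps = 0.0, 0
    for _ in range(max_steps):
        a = policy(obs)
        obs, r, done, info = env.step(a)
        total += r
        steps += 1
        if done:
            break
    return total, steps


# A random policy that ignores the observation.
total_reward, n_steps = run_episode(StubEnv(horizon=5), lambda obs: random.uniform(-1, 1))
```

The loop stops as soon as done is True, which is the point where a longer-running script would call reset() to start a new trajectory.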

Render the environment

Some gym-anm environments may support rendering through the render() and close() functions.

To update the visualization of the environment, the render method is called:

env.render()

To end the visualization and close all used resources:

env.close()
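Since close() releases the resources used by the visualization, one common pattern is to wrap the rendering loop in try/finally so that close() still runs if the loop raises an exception. The sketch below illustrates that pattern under assumptions: `StubRenderEnv` is a hypothetical stand-in that only records calls to render() and close(), and it says nothing about how gym-anm implements either method.

```python
class StubRenderEnv:
    """Hypothetical stand-in that records render()/close() calls."""

    def __init__(self):
        self.frames = 0
        self.closed = False

    def render(self):
        if self.closed:
            raise RuntimeError("render() called after close()")
        self.frames += 1

    def close(self):
        self.closed = True


def render_steps(env, n_steps):
    """Render n_steps frames and always close the environment afterwards."""
    try:
        for _ in range(n_steps):
            env.render()
    finally:
        env.close()  # runs even if render() raises
    return env.frames


env = StubRenderEnv()
frames_drawn = render_steps(env, 3)
```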

Currently, only gym_anm:ANM6Easy-v0 supports rendering.

Complete example

A complete example of agent-environment interactions with an arbitrary agent agent:

import time

import gym

# `agent` is any object exposing an act(observation) method; it is not defined here.
env = gym.make('gym_anm:ANM6Easy-v0')
o = env.reset()

for i in range(1000):
    a = agent.act(o)
    o, r, done, info = env.step(a)
    env.render()
    time.sleep(0.5)  # otherwise the rendering is too fast for the human eye

    if done:
        o = env.reset()

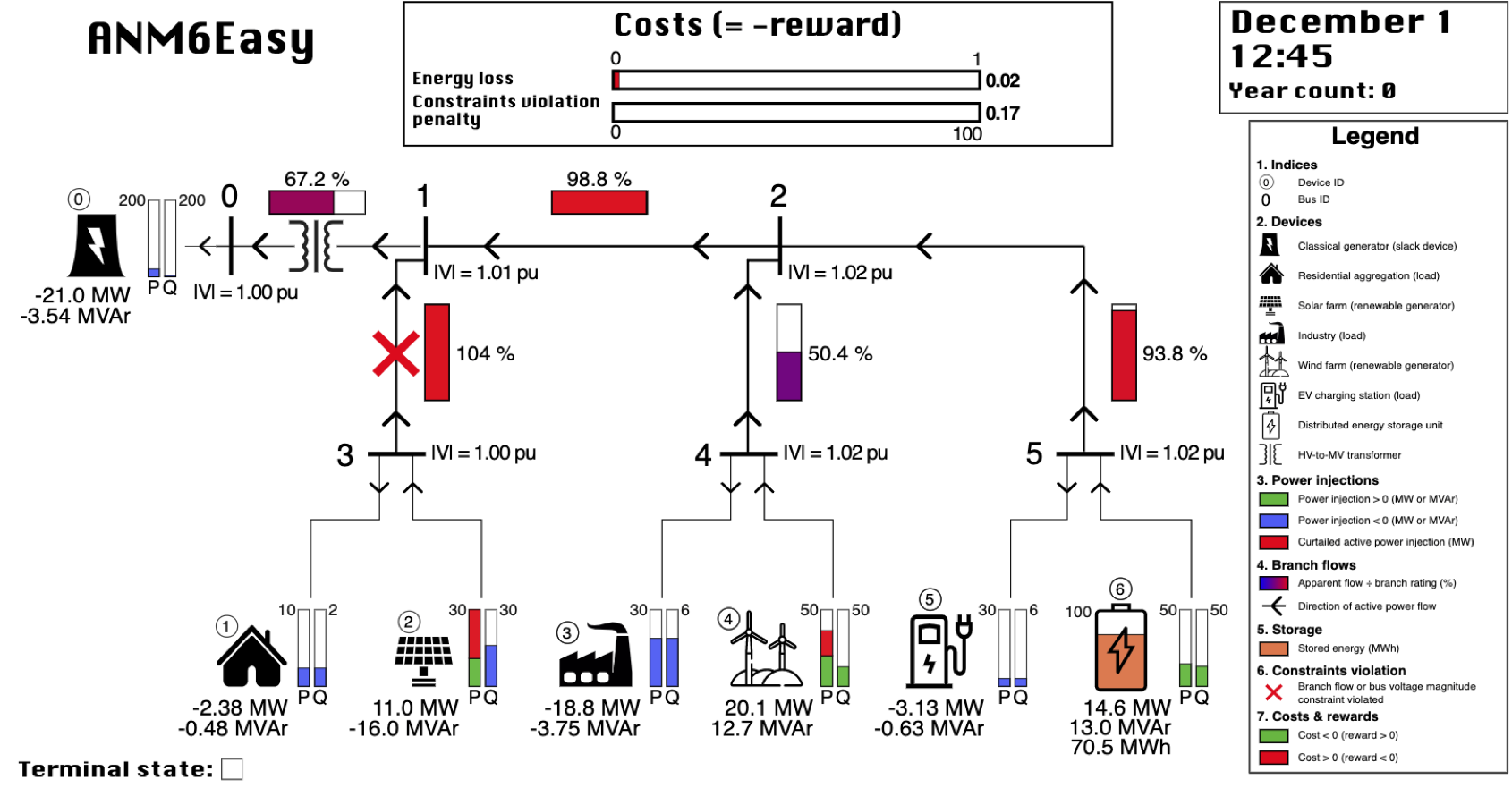

The above example would be rendered in your favorite web browser as:
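The agent object in the complete example is left unspecified. As one possibility, the sketch below defines a hypothetical `RandomAgent` whose act(o) method ignores the observation and draws an action from the action space. It assumes the environment exposes the standard Gym `action_space` attribute with a `sample()` method. `StubSpace` is a stand-in for that attribute so the snippet runs without Gym installed, and its bounds are arbitrary.

```python
import random


class StubSpace:
    """Hypothetical stand-in for a Gym action space with a sample() method."""

    def __init__(self, low, high):
        self.low = low    # per-dimension lower bounds
        self.high = high  # per-dimension upper bounds

    def sample(self):
        return [random.uniform(lo, hi) for lo, hi in zip(self.low, self.high)]


class RandomAgent:
    """Hypothetical agent: act() ignores the observation and samples an action."""

    def __init__(self, action_space):
        self.action_space = action_space

    def act(self, obs):
        return self.action_space.sample()


space = StubSpace(low=[0.0, -1.0], high=[1.0, 1.0])
agent = RandomAgent(space)
action = agent.act(obs=[0.0, 0.0, 0.0])
```

With a real environment, the same class could be constructed as RandomAgent(env.action_space) and passed to the loop in place of agent.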